AI has opened up new possibilities for mobile apps, and OpenAI’s APIs are a common way to bring sophisticated AI capabilities to iOS. Whether you’re building a chatbot, a content generator, or an intelligent assistant, integrating the OpenAI API into your Xcode project can make your app considerably more capable. To do this safely, iOS developers need to understand how to handle the OpenAI API key when working in Xcode.

Understanding the Challenge

Using an OpenAI API key in an iOS app raises problems that developers must consider carefully. Server programs can keep API keys in environment variables or configuration files that never leave the server, but anything shipped inside a mobile app is exposed to whoever installs it. This fundamental difference affects every decision made during the integration process.

The primary concern is the security of the API key. Mobile apps are delivered to end users, and an attacker can decompile the app binary or inspect its network traffic to obtain embedded secrets. A leaked key could lead to unauthorised use of the API, unexpected billing, and abuse of the service.

Security-First Approach

The most important part of integrating with OpenAI is to use a security-focused strategy. Never include the OpenAI API key in your app’s source code or configuration files. While this may seem convenient and straightforward, doing so puts your OpenAI account at serious risk: anyone who extracts the key can make requests that are billed to your account.

The gold standard for secure integration is to implement a backend proxy. This architecture places your server between your iOS app and the OpenAI API and keeps the API key inside your own secure infrastructure. Your iOS app communicates only with your backend, which attaches the API key and forwards requests to OpenAI.

This proxy solution provides several security benefits. It gives better control over API usage, enables logging and monitoring of requests, supports response caching to save costs, and allows custom business logic or content filtering to be applied before requests reach OpenAI.
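As a rough illustration of the client side of this architecture, the sketch below builds a request aimed at your own backend rather than at OpenAI. The host `api.example.com`, the `v1/chat` path, the `ProxyChatRequest` body, and the per-user `sessionToken` are all hypothetical; the point is only that no OpenAI key appears anywhere in the app.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLRequest lives here on Linux
#endif

/// Request body sent to the (hypothetical) backend proxy.
struct ProxyChatRequest: Codable {
    let message: String
}

/// Builds a request aimed at your own backend, never at OpenAI directly.
/// `sessionToken` is a hypothetical per-user credential issued by your backend;
/// the OpenAI API key stays on the server and is not part of this request.
func makeProxyRequest(baseURL: URL, message: String, sessionToken: String) throws -> URLRequest {
    var request = URLRequest(url: baseURL.appendingPathComponent("v1/chat"))
    request.httpMethod = "POST"
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    request.setValue("Bearer \(sessionToken)", forHTTPHeaderField: "Authorization")
    request.httpBody = try JSONEncoder().encode(ProxyChatRequest(message: message))
    return request
}
```

Because the token identifies a user rather than your OpenAI account, the backend can revoke or rate-limit it without rotating the API key.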

Step-by-Step Integration Process

1: Set Up Your Backend Server

Create a secure backend service that will be a proxy between your iOS app and OpenAI. This server will securely store your API key and handle all direct communication with OpenAI servers.

2: Configure Xcode Project Settings

Open your Xcode project and review its Info.plist. App Transport Security (ATS) already permits HTTPS connections, so make sure your backend server is reachable over HTTPS and avoid adding ATS exceptions that would allow insecure HTTP.

3: Create Network Service Layer

Implement a separate Swift service class for all API interactions. The service should handle request formatting, response parsing, and error handling.

4: Implement API Request Methods

Create methods for the OpenAI endpoints you need, such as chat completions or text generation, and include error handling and response parsing in each.

5: Add User Interface Components

Plan for responsive UI components that handle loading states, progress indicators, and the display of AI-generated content.

Implementation Considerations

Preparing your Xcode project for OpenAI integration starts with reviewing its network and security settings. iOS apps need no special entitlement for outbound HTTPS requests, and App Transport Security permits HTTPS connections by default, so the safest configuration is usually to leave ATS untouched and add no exceptions. When integrating OpenAI into your app in Xcode, keeping these defaults, and keeping the API key out of the project entirely, protects both your app and your users.

Implement a separate services layer to manage all interactions with OpenAI. This architectural design separates the API logic from your app’s user interface, making it easier to test and maintain your code. The services layer is responsible for formatting requests, validating input, handling errors, and converting data to the correct format where necessary.
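One way to keep that separation testable is to hide the service behind a protocol and validate input before it reaches the network. The sketch below is only an illustration: `TextGenerating`, `StubTextGenerator`, `validatedPrompt`, and the 4,000-character limit are hypothetical names and values, not part of any OpenAI SDK.

```swift
import Foundation

/// Abstraction the UI depends on; a concrete type can talk to the backend proxy.
protocol TextGenerating {
    func generateReply(to prompt: String) async throws -> String
}

/// Test double that returns a canned reply without touching the network.
struct StubTextGenerator: TextGenerating {
    let cannedReply: String
    func generateReply(to prompt: String) async throws -> String { cannedReply }
}

enum PromptValidationError: Error, Equatable {
    case empty
    case tooLong(limit: Int)
}

/// Trims and validates user input before it is handed to the service.
/// The default limit is an arbitrary illustrative value.
func validatedPrompt(_ raw: String, limit: Int = 4_000) throws -> String {
    let trimmed = raw.trimmingCharacters(in: .whitespacesAndNewlines)
    guard !trimmed.isEmpty else { throw PromptValidationError.empty }
    guard trimmed.count <= limit else { throw PromptValidationError.tooLong(limit: limit) }
    return trimmed
}
```

View models can then accept any `TextGenerating` value, so unit tests and SwiftUI previews run against the stub while production code injects the real service.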

Make your UI responsive to the asynchronous nature of API calls. OpenAI requests can take several seconds to return, especially for complex requests or during peak times. Implement appropriate loading states, progress indicators, and timeout management to ensure a smooth user experience.
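Loading, success, failure, and timeout handling can be modelled as a small state machine that the UI simply renders. This is a sketch under assumed names (`ReplyViewState`, `ReplyEvent`, `nextState`); how the timeout event is produced is left to the caller.

```swift
/// States the reply view can be in.
enum ReplyViewState: Equatable {
    case idle
    case loading
    case loaded(String)
    case failed(String)
}

/// Things that can happen while a request is in flight.
enum ReplyEvent {
    case submitted
    case received(String)
    case failedWith(String)
    case timedOut
}

let timeoutMessage = "The request took too long. Please try again."

/// Pure transition function: given the current state and an event, return the next state.
func nextState(from state: ReplyViewState, on event: ReplyEvent) -> ReplyViewState {
    switch (state, event) {
    case (_, .submitted):
        return .loading  // a new submission always shows the loading indicator
    case (.loading, .received(let text)):
        return .loaded(text)
    case (.loading, .failedWith(let message)):
        return .failed(message)
    case (.loading, .timedOut):
        return .failed(timeoutMessage)
    default:
        return state  // results that arrive after a timeout or failure are ignored
    }
}
```

The timeout itself could come from `URLRequest.timeoutInterval` or from a timer owned by the view model; keeping the transitions pure makes them easy to unit test.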

Managing API Responses and Performance

OpenAI API responses can vary significantly in size and processing time depending on the nature of the requests. Use efficient response processing to ensure your application is responsive. Consider streaming responses when creating long-form content so that users can view partial results as they are generated without having to wait for final responses.
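Streaming from the chat completions endpoint arrives as Server-Sent Events: lines beginning with `data: ` that carry a JSON chunk, ending with `data: [DONE]`. The sketch below parses one such line. It assumes your backend relays the stream unchanged, and `StreamChunk`, `StreamLine`, and `parseStreamLine` are illustrative names that declare only the fields used here.

```swift
import Foundation

/// Minimal shape of one streamed chunk; only the fields used here are declared.
struct StreamChunk: Decodable {
    struct Choice: Decodable {
        struct Delta: Decodable { let content: String? }
        let delta: Delta
    }
    let choices: [Choice]
}

/// Result of parsing a single line of the event stream.
enum StreamLine: Equatable {
    case text(String)  // a piece of generated text to append to the UI
    case done          // the stream has finished
    case ignored       // blank lines, comments, or chunks without text
}

func parseStreamLine(_ line: String) -> StreamLine {
    let prefix = "data: "
    guard line.hasPrefix(prefix) else { return .ignored }
    let payload = String(line.dropFirst(prefix.count))
    if payload == "[DONE]" { return .done }
    guard let data = payload.data(using: .utf8),
          let chunk = try? JSONDecoder().decode(StreamChunk.self, from: data),
          let text = chunk.choices.first?.delta.content else { return .ignored }
    return .text(text)
}
```

On iOS 15 and later, the `lines` sequence returned by `URLSession.bytes(for:)` is one possible source of input for this function, with each `.text` fragment appended to the visible reply as it arrives.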

Use intelligent caching techniques to limit API calls and improve performance. Cache frequently requested data, such as recent chat messages or generated text templates, and apply proper cache invalidation so that content stays fresh.
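A minimal sketch of time-based invalidation follows, assuming responses are keyed by the exact prompt text. `ResponseCache` is a hypothetical type, and the current time is passed in so that expiry can be tested deterministically.

```swift
import Foundation

/// Small in-memory cache whose entries expire after `timeToLive` seconds.
/// Not thread-safe; wrap it in an actor or a lock if it is shared across tasks.
struct ResponseCache {
    private struct Entry {
        let value: String
        let storedAt: Date
    }

    private var entries: [String: Entry] = [:]
    let timeToLive: TimeInterval

    init(timeToLive: TimeInterval) {
        self.timeToLive = timeToLive
    }

    mutating func store(_ value: String, for prompt: String, now: Date = Date()) {
        entries[prompt] = Entry(value: value, storedAt: now)
    }

    /// Returns the cached value, or nil if it is missing or older than `timeToLive`.
    mutating func value(for prompt: String, now: Date = Date()) -> String? {
        guard let entry = entries[prompt] else { return nil }
        if now.timeIntervalSince(entry.storedAt) > timeToLive {
            entries[prompt] = nil  // drop the stale entry
            return nil
        }
        return entry.value
    }
}
```

Exact-match keys only help when users repeat identical prompts; whether that is common enough to justify a cache depends on the app.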

Error Handling and User Experience

Effective error handling is critical to ensuring a good user experience when managing external API dependencies. The OpenAI API can return different errors, from authentication to rate-limiting and server errors. Each type of error requires appropriate handling and notification to the user.
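The sketch below maps HTTP status codes to a small error type with a user-facing message. It assumes your backend passes through the status codes OpenAI uses for these cases (401 for authentication failures, 429 for rate limits, 5xx for server errors); `AIServiceError` and `classify` are illustrative names.

```swift
enum AIServiceError: Error, Equatable {
    case unauthorized
    case rateLimited(retryAfter: Double?)
    case serverUnavailable
    case unexpected(statusCode: Int)

    /// Whether retrying the same request later might succeed.
    var isRetryable: Bool {
        switch self {
        case .rateLimited, .serverUnavailable: return true
        case .unauthorized, .unexpected: return false
        }
    }

    /// Plain-language message suitable for showing to the user.
    var userMessage: String {
        switch self {
        case .unauthorized: return "Your session has expired. Please sign in again."
        case .rateLimited: return "Too many requests right now. Please wait a moment and try again."
        case .serverUnavailable: return "The AI service is temporarily unavailable. Please try again shortly."
        case .unexpected: return "Something went wrong. Please try again."
        }
    }
}

/// Returns nil for success codes, otherwise the matching error.
/// `retryAfterHeader` is the raw Retry-After header value in seconds, if the server sent one.
func classify(statusCode: Int, retryAfterHeader: String? = nil) -> AIServiceError? {
    switch statusCode {
    case 200..<300: return nil
    case 401, 403: return .unauthorized
    case 429: return .rateLimited(retryAfter: retryAfterHeader.flatMap { Double($0) })
    case 500..<600: return .serverUnavailable
    default: return .unexpected(statusCode: statusCode)
    }
}
```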

Create graceful fallbacks for when API calls fail. This may include serving cached content, reducing functionality, or offering users an alternative. Always communicate errors to users in plain language, explaining the cause of the failure and possible solutions.

Use retry logic with exponential backoff for transient failures, but cap the number of retries, as retrying too often can worsen rate limiting or increase costs unnecessarily.
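A minimal sketch of the delay calculation, assuming a base delay of 0.5 seconds that doubles on each attempt and is capped at 8 seconds. These numbers and the function names are illustrative, not recommendations from OpenAI.

```swift
import Foundation

/// Delay before retry number `attempt` (0-based): base * 2^attempt, capped at `maxDelay`.
func backoffDelay(attempt: Int, base: TimeInterval = 0.5, maxDelay: TimeInterval = 8) -> TimeInterval {
    let exponential = base * pow(2.0, Double(max(0, attempt)))
    return min(exponential, maxDelay)
}

/// "Full jitter": a random delay between 0 and the backoff delay,
/// so that many clients do not all retry at the same instant.
func jitteredDelay(attempt: Int, base: TimeInterval = 0.5, maxDelay: TimeInterval = 8) -> TimeInterval {
    TimeInterval.random(in: 0...backoffDelay(attempt: attempt, base: base, maxDelay: maxDelay))
}

/// Un-jittered delays for a bounded number of retries.
func retrySchedule(maxRetries: Int) -> [TimeInterval] {
    (0..<max(0, maxRetries)).map { backoffDelay(attempt: $0) }
}
```

With the defaults, `retrySchedule(maxRetries: 3)` yields delays of 0.5, 1, and 2 seconds; bounding `maxRetries` is what keeps retries from compounding rate-limit or cost problems.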

Testing and Quality Assurance

Testing an OpenAI integration calls for a comprehensive strategy covering functional and non-functional requirements. Test your implementation with various input types and sizes to ensure edge cases are handled effectively. This includes testing with short queries, long inputs, special characters, and multiple languages.

Performance testing is essential, especially for applications that involve heavy usage. Test how your application responds to various network conditions, such as slow networks and unstable connections. Ensure that error handling works gracefully when the OpenAI API is down or responding slowly.

Implement feature flags or configuration switches that allow OpenAI features to be turned off in production when issues are detected. This will make it easy to keep your application running even if there are issues with the AI integration.
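One possible shape for such a switch is a small flags structure decoded from remotely fetched JSON, falling back to "off" when the configuration is missing or malformed. `AIFeatureFlags` and its field names are hypothetical, and how the JSON is fetched is left out.

```swift
import Foundation

/// Remote switches for AI features. Field names are illustrative.
struct AIFeatureFlags: Decodable, Equatable {
    var chatEnabled: Bool
    var streamingEnabled: Bool

    /// Used when no valid configuration is available: everything off.
    static let safeDefaults = AIFeatureFlags(chatEnabled: false, streamingEnabled: false)

    /// Decodes flags from JSON, falling back to the safe defaults on any problem.
    static func load(from data: Data?) -> AIFeatureFlags {
        guard let data = data,
              let flags = try? JSONDecoder().decode(AIFeatureFlags.self, from: data) else {
            return safeDefaults
        }
        return flags
    }
}
```

Defaulting to "off" is a design choice: it favours a working app without AI over a broken AI feature. Some teams prefer the opposite default so that a configuration outage does not disable a core feature.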

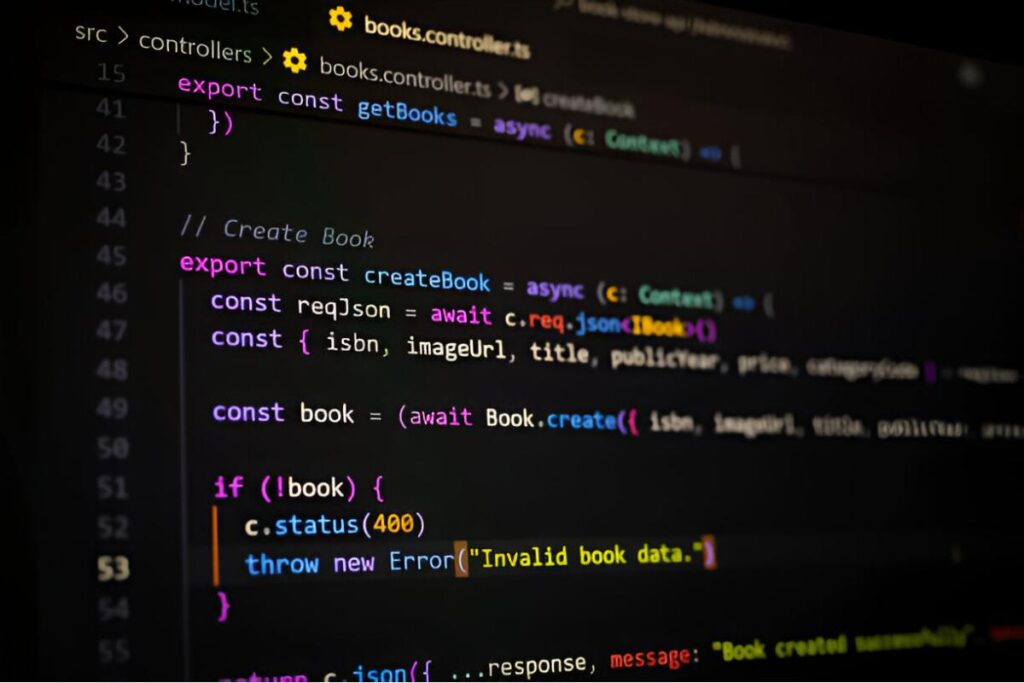

Code Implementation Example

Here’s a basic Swift service class structure for handling OpenAI requests:
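A minimal sketch of such a service, assuming a hypothetical backend proxy that accepts `{"message": ...}` at a `chat` path and answers with `{"reply": ...}`. The type names, the path, the JSON shapes, and the 30-second timeout are all assumptions made for illustration.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLSession lives here on Linux
#endif

/// Response shape of the hypothetical backend proxy.
struct ChatReply: Decodable {
    let reply: String
}

enum ChatServiceError: Error {
    case invalidResponse
    case http(statusCode: Int)
}

final class ChatService {
    private let baseURL: URL
    private let session: URLSession

    init(baseURL: URL, session: URLSession = .shared) {
        self.baseURL = baseURL
        self.session = session
    }

    /// Formats the request. No OpenAI key is involved; the backend adds it.
    func makeRequest(message: String) throws -> URLRequest {
        var request = URLRequest(url: baseURL.appendingPathComponent("chat"))
        request.httpMethod = "POST"
        request.timeoutInterval = 30
        request.setValue("application/json", forHTTPHeaderField: "Content-Type")
        request.httpBody = try JSONEncoder().encode(["message": message])
        return request
    }

    /// Checks the status code and decodes the reply text.
    func parseReply(data: Data, statusCode: Int) throws -> String {
        guard (200..<300).contains(statusCode) else {
            throw ChatServiceError.http(statusCode: statusCode)
        }
        return try JSONDecoder().decode(ChatReply.self, from: data).reply
    }

    /// Sends the message and returns the reply, bridging the callback API to async/await.
    func send(message: String) async throws -> String {
        let request = try makeRequest(message: message)
        let result: (Data, Int) = try await withCheckedThrowingContinuation {
            (continuation: CheckedContinuation<(Data, Int), Error>) in
            session.dataTask(with: request) { data, response, error in
                if let error = error {
                    continuation.resume(throwing: error)
                } else if let data = data, let http = response as? HTTPURLResponse {
                    continuation.resume(returning: (data, http.statusCode))
                } else {
                    continuation.resume(throwing: ChatServiceError.invalidResponse)
                }
            }.resume()
        }
        return try parseReply(data: result.0, statusCode: result.1)
    }
}
```

Keeping `makeRequest` and `parseReply` separate from `send` means request formatting and response parsing can be unit tested without any network access.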

Cost Monitoring and Management

To keep an OpenAI integration sustainable, you must implement effective cost management strategies. Monitor token usage patterns to understand your application’s resource consumption and find ways to optimise it. Set up alerts for abnormal spikes in usage, which may indicate a bug or abuse.
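As a sketch of spike detection, the monitor below records the token totals reported with each response and flags a request that uses several times the running average. `TokenUsage`, `UsageMonitor`, and the factor of 3 are illustrative; in practice this logic would more likely live on the backend, where all users' traffic is visible.

```swift
/// Token counts as reported in the `usage` object of a chat completion response.
struct TokenUsage: Equatable {
    let promptTokens: Int
    let completionTokens: Int
    var total: Int { promptTokens + completionTokens }
}

struct UsageMonitor {
    /// Total tokens of every recorded request, oldest first.
    private(set) var history: [Int] = []
    /// A request is flagged when it exceeds `spikeFactor` times the running average.
    let spikeFactor: Double

    init(spikeFactor: Double = 3) {
        self.spikeFactor = spikeFactor
    }

    /// Records one request and returns true if it looks like an abnormal spike.
    /// The first request is never flagged because there is no baseline yet.
    @discardableResult
    mutating func record(_ usage: TokenUsage) -> Bool {
        defer { history.append(usage.total) }
        guard !history.isEmpty else { return false }
        let average = Double(history.reduce(0, +)) / Double(history.count)
        return Double(usage.total) > average * spikeFactor
    }
}
```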

Consider setting per-user usage limits or introducing premium tiers for heavy use of AI features. This helps control costs while still providing value to the users who benefit most from the AI capabilities.
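A per-user limit can be sketched as a daily token budget that depends on the user's plan. The plans, the limits, and the `DailyQuota` type are hypothetical, and real enforcement belongs on the backend, since anything checked only on the device can be bypassed.

```swift
enum Plan {
    case free
    case premium
}

struct DailyQuota {
    /// Tokens already used today, keyed by user identifier.
    private var usedTokens: [String: Int] = [:]

    init() {}

    /// Illustrative limits, not recommendations.
    static func limit(for plan: Plan) -> Int {
        switch plan {
        case .free: return 10_000
        case .premium: return 200_000
        }
    }

    /// Tokens the user may still spend today.
    func remaining(userID: String, plan: Plan) -> Int {
        max(0, DailyQuota.limit(for: plan) - usedTokens[userID, default: 0])
    }

    /// Deducts `tokens` if the budget allows it; returns false and changes nothing otherwise.
    mutating func consume(_ tokens: Int, userID: String, plan: Plan) -> Bool {
        guard tokens <= remaining(userID: userID, plan: plan) else { return false }
        usedTokens[userID, default: 0] += tokens
        return true
    }
}
```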

Regularly monitor the performance and cost of the integration, refining requests and prompts to improve efficiency. In some cases, minor changes to a prompt can significantly reduce token usage without compromising the quality of the result.

Compliance and Privacy Issues

Give users the option to opt in to or out of AI features, and clearly explain how their data is handled when they interact with the AI.

Store user data securely and avoid sending personal information to external APIs wherever possible. Use data anonymisation or pseudonymisation techniques where appropriate.
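As one simple illustration, the sketch below replaces email addresses and long digit sequences with placeholders before a prompt leaves the device. The two patterns are deliberately crude examples and `redactingPersonalData` is a hypothetical helper; it will not catch every kind of personal data.

```swift
import Foundation

/// Replaces email addresses and long digit runs with placeholders.
/// The patterns are simple illustrations, not a complete PII detector.
func redactingPersonalData(in text: String) -> String {
    let rules: [(pattern: String, placeholder: String)] = [
        ("[A-Z0-9._%+-]+@[A-Z0-9.-]+\\.[A-Z]{2,}", "[email]"),
        ("\\b\\d{9,}\\b", "[number]")  // e.g. phone or account numbers without separators
    ]
    var result = text
    for rule in rules {
        guard let regex = try? NSRegularExpression(pattern: rule.pattern,
                                                   options: [.caseInsensitive]) else { continue }
        let range = NSRange(result.startIndex..., in: result)
        result = regex.stringByReplacingMatches(in: result, options: [],
                                                range: range, withTemplate: rule.placeholder)
    }
    return result
}
```

Running the same redaction again on the backend gives a second line of defence in case a client version ships without it.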

Deployment and Maintenance

When launching an app integrated with OpenAI, use comprehensive monitoring to track API performance, error rates, and usage patterns. Monitoring helps identify issues early and provides actionable insights for future optimisation.

Invest in regular maintenance, such as OpenAI API updates, iOS updates, and security patches. The AI ecosystem constantly changes, and staying on top of the latest integration techniques ensures optimal performance and security.

Consider implementing an A/B testing framework to validate different AI features and to improve the user experience iteratively, based on real usage patterns.

Future-Proofing Your Integration

The world of mobile and AI development is constantly changing. Make your integration architecture extensible and flexible to easily adapt to new features from OpenAI or other AI services.

Monitor OpenAI’s roadmap for new features that might benefit your app. The company regularly releases new models and capabilities that could make your app more functional.

Consider the long-term scalability of your integration. As your number of users grows, ensure your architecture can tolerate increased API load and associated costs without impacting performance or user experience.

Conclusion

Successfully leveraging the power of OpenAI in your iOS app requires careful planning, robust security, and thoughtful architectural decisions. By implementing a security-focused solution, using proper error handling, and considering the user experience, you can build robust AI-powered features that improve user satisfaction while protecting your account and your budget. Remember that integrating OpenAI into an Xcode app doesn’t end with deployment. Staying competitive in AI-powered mobile apps requires ongoing monitoring, optimisation, and adaptation to new AI advances.